Navigating the Neurotechnology Frontier

Virginia Mahieu | July 2024

Neurotechnologies – devices that can read from and write to the brain – are widely considered the next frontier of medical treatment and human enhancement. They are undergoing an explosion of innovation: rapid advances in artificial intelligence, biotechnology, nanotechnology, materials science, engineering and robotics are converging to enable even more rapid advances in neurotechnologies, for both medical and non-medical purposes. This acceleration and convergence raise important ethical, societal, and legal questions. What promise do these technologies hold, and how powerful can they become? Conversely, what are their limits for mitigating human disabilities or augmenting human capabilities? And what threats could they pose to citizens and to society that decision-makers should safeguard against?

Discussions have begun at multiple levels1 to address such questions. The neurotechnology “puzzle” is commonly broken down into three pieces:

- The opportunities that neurotechnologies present;

- The possible risks they could incur;

- And the laws and regulatory frameworks that protect against these risks, while ensuring that the benefits of neurotechnologies can be maximised.

The promise of neurotechnologies: how they are being – and could be – used

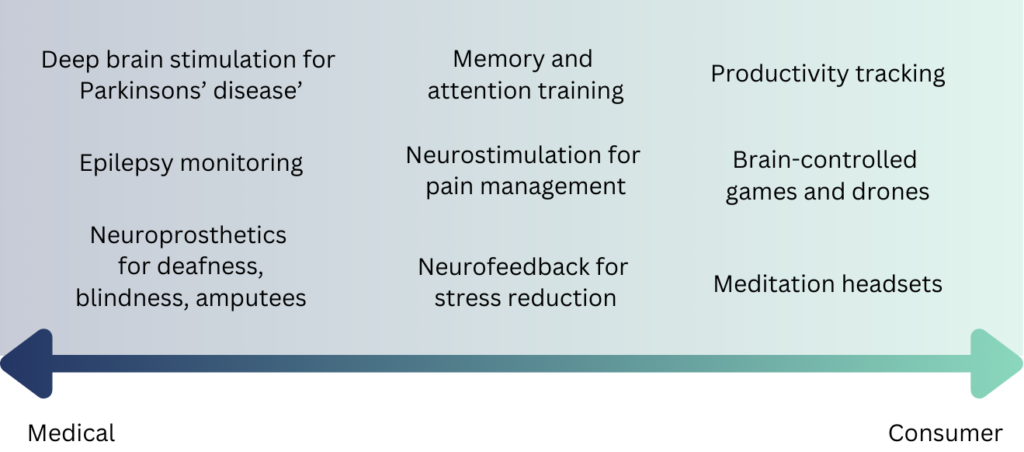

Neurotechnologies offer immense promise to treat conditions for which no other remedies currently exist. Compared to most pharmacological solutions, neurotechnologies have the appealing advantage of acting directly on their target, and their effects are often reversible. They already have a demonstrated history of success, such as the cochlear implant and deep-brain stimulation for Parkinson’s disease and for severe depression. Within five to ten years, implantable devices could restore sight to blind people, help those who have been paralysed to walk again, and enable patients with severe paralysis to communicate.

Meanwhile, wearable (and, perhaps one day, implanted) consumer devices could enhance learning abilities, boost physical performance for sport or combat, and supercharge productivity. Tech giants like Meta and Apple, as well as many other companies, are already developing and patenting consumer products that would allow people to interact with computers directly via their nervous system.

Somewhere in between medical and consumer devices are those that promise to reduce stress and anxiety, and even treat migraines, insomnia, attention deficits, irritable bowel syndrome, and many other common disorders, although many of these devices are not officially classed as medical devices or fully supported by strong scientific evidence. Furthermore, developers currently working primarily on medical applications could eventually transfer their expertise to the consumer market. This could increasingly blur the lines between medicine and commerce, with growing implications for the data that these technologies collect and process, as well as the regulations that govern it.

Estimates of neurotechnology market growth hover around an annual rate of 12%, with the market expected to reach $21 billion by 2026 and over $40 billion by 2032. Today, widely available and affordable neurotechnologies are able to distinguish a person’s overall mental state – such as how alert, awake, focused, or meditative they are – by analysing their brain waves. New and more sophisticated consumer technologies, depending on just how powerful they become, could potentially infer aspects of users’ feelings, preferences and emotions, as well as predict future brain disease.

Furthermore, the open-source and accessible nature of many devices (which in the past were only available to highly trained scientists in research institutions), combined with significant recent advances in accessible AI tools, means that more people are empowered to come up with new and creative applications of neurotechnologies and interpretations of data derived from them2, contributing to a growing start-up ecosystem and further accelerating innovation.

Challenges and risks

First and foremost, the extremely sensitive data generated by users’ brains, which could expose their most private and intimate thoughts, could be up for grabs depending on how that data is processed and stored. Today, personal data – as well as data on how people interact with platforms – is already being used by corporations to profile people and drive content algorithms on social media. The ability to monitor and interpret brain activity could allow tech companies to gain unprecedented insights into consumers’ thoughts, preferences, and vulnerabilities, propelling the era of big data analytics and surveillance capitalism to new heights.

We must find a way to ensure that people – both patients and consumers – remain in control of their brain data, understand the risks, are informed of its processing, and explicitly consent to its use. It is crucial to draw the line on what is acceptable if companies aim to harvest and sell consumer brain data to tailor marketing campaigns and social media feeds3, and to debate the desirability of mental surveillance (particularly in the workplace) and of neuro-policing in the judicial system. And of course, the security of this extremely sensitive data must be guaranteed within and across European borders.

On the societal front, the cost of productivity and cognitive enhancement devices could further exacerbate existing digital and economic divides. Issues specific to AI, such as algorithmic bias, might transfer to users of neurotechnology, leading to further discrimination. Furthermore, could brain-controlled computers exacerbate the trend of increasing mental illness among youth associated with the ubiquity of social media and internet use?

Important questions also arise on the medical front, including the potential for unanticipated secondary effects of medical implants, and the implications for patients if the companies maintaining the implants they depend upon go bankrupt.

Finally, we cannot disregard the possible implications of military innovation in neurotechnology, with potential applications ranging from soldier enhancement to cognitive warfare. These innovations could redefine the battlefield and the nature of conflict, raising a myriad of complex and deeply concerning questions for human rights and the security and safety of populations.

Stress-testing current and future EU legal and governance frameworks

In order for these technologies to truly benefit society, it is crucial that their development be subject to public scrutiny, and that the relevant governance frameworks be clear, practical and comprehensive, and ensure safety for users so that responsible innovation can thrive. As an economic union and rights-driven international actor based on the rule of law, the EU is in a strong position to assert a guiding hand to maximise the benefits of neurotechnologies while safeguarding citizens and society from their possible harms. However, current regulatory frameworks, designed to deal with technology in a generic way, may struggle to address the convergence innate to neurotechnologies. Furthermore, with the accelerating pace of this field, policy-making needs to be forward-looking and anticipatory to keep up with new advances.

There are ongoing international initiatives to better understand the implications of neurotechnologies for our human rights, such as efforts through the Council of Europe, the OECD, UNESCO, and the UNHRC. With a European focus, we ask: does the EU’s Charter of Fundamental Rights adequately and fully protect our individual and collective human rights to privacy, non-discrimination, freedom of thought, freedom of expression, and even liberty and security with respect to new advances in neurotechnologies? The debate on whether the brain needs new (neuro-)rights continues, though several experts argue that existing rights are sufficient.

Whether or not additional neuro-rights are needed, regulation must be designed so that it adequately protects the integrity of the person and the brain. There is a clear need to take careful stock of the EU’s existing governance frameworks4, compare these to the current and upcoming developments in neurotechnologies, and stress-test current legislation for grey areas, weaknesses and gaps where the fundamental rights of citizens and society could be at risk. Existing legal frameworks like the GDPR and medical device regulations must be thoroughly evaluated to determine if they are equipped to handle the sensitive nature of brain data and the unique ethical considerations surrounding neurotechnologies, and European labour laws should be assessed to ensure they adequately safeguard freedom of thought and self-determination in the workplace. It may be that neurotechnology-specific regulatory guidelines need to be formulated, distinct from those for other types of medical and consumer devices. To accomplish this, we must operate on two timelines: examining current neurotechnologies and their immediate implications, while also laying the groundwork for long-term developments and uncertainties in the field.

Furthermore, the societal integration of these technologies – their uptake by practitioners, patients and consumers – is a key factor to consider. At what scale and speed will new technologies be adopted? How will people of different cultures, socio-economic groups, lived experiences, even political opinions perceive and value these technologies, and the risks they could pose to society?

While there have been numerous guidance documents and multilateral efforts on the ethics of neurotechnology5, including the León Declaration on European neurotechnology jointly signed by EU Member States, we must consider how to put this guidance into action. To do this right, we need clear strategies to ensure that the governance models of these technologies, and the formulation and implementation of policy, speak to people from all walks of life. In order to bring these three pieces of the puzzle together, we need a values-based, participatory and inclusive approach to the EU governance dialogue on neurotechnology.

A shot from the workshop held by ICFG and IoNx in May 2024.

Piecing the puzzle together: towards an equitable future for neurotechnology governance

ICFG was founded to ensure the ethical and safe development of powerful emerging technologies in order to enable the flourishing of current and future generations. Neurotechnologies have the potential to deeply transform society for generations to come. Thus, ICFG is building a neurotechnology program to anticipate the rapid developments in the field and the risks they could pose to society, assess the robustness of existing legislation in the EU and more widely, and explore options for regulatory review. It aims to act as a platform that brings together the many stakeholders affected by neurotechnologies in order to discuss the many questions outlined above, with the goal of ensuring that regulatory frameworks in the EU are sufficiently robust to protect the fundamental rights of its citizens.

Indeed, it is essential to ICFG’s mission and values to take a collaborative approach to generating knowledge about the development of emerging technologies and how they stand to impact our society. In that spirit, ICFG has partnered with the Institute for Neuroethics Think and Do Tank (IoNx) to generate actionable ideas to enable responsible and comprehensive governance models for neurotechnology. IoNx is the first global think and do tank wholly dedicated to understanding the ethical, legal, and social implications of emerging neurotechnologies. It leverages the collective wisdom of its specialised networks towards rigorous analysis paired with strategic action, with a key focus on co-creation and engagement.

Together, ICFG and IoNx are exploring ways to ensure that the dialogues shaping the governance of neurotechnology are guided by principles of inclusion, engagement, commitment to rigour over hype, and thorough ethical and human rights analyses.

We look forward to engaging with practitioners, experts, and impacted individuals and communities to collectively explore, analyse, and develop responsible, equitable, and inclusive approaches to neurotechnology governance.

1 For example, at UNESCO, the Council of Europe, the OECD and the European Parliament, by the Spanish Presidency of the Council of the European Union, and in Chile.

2 For example, one YouTuber used a consumer EEG device to open and prompt ChatGPT when he thought about lemons.

3 France has effectively banned neuromarketing by passing a law in 2011 stating that “Brain-imaging methods can be used only for medical or scientific research purposes or in the context of court expertise.”

4 This work has already begun. For instance, EU Member States have signed the León Declaration on European neurotechnology, calling for the European Commission “to facilitate specialised high-level expert discussions to assess the degree to which the existing regulatory and policy frameworks, including those legislative proposals to be adopted soon, safeguard individual and collective rights in the context of neurotechnologies.” Furthermore, TechEthos, a Horizon Europe-funded project, conducted legal, ethical and societal analyses of the neurotechnology landscape. It concluded by encouraging further work.

5 As well as several deep-dive reports, such as those from the UK Information Commissioner’s Office, the Spanish Digital Future Society, and IBM together with the US-based think tank Future of Privacy Forum, and reporting from the International Association of Privacy Professionals.